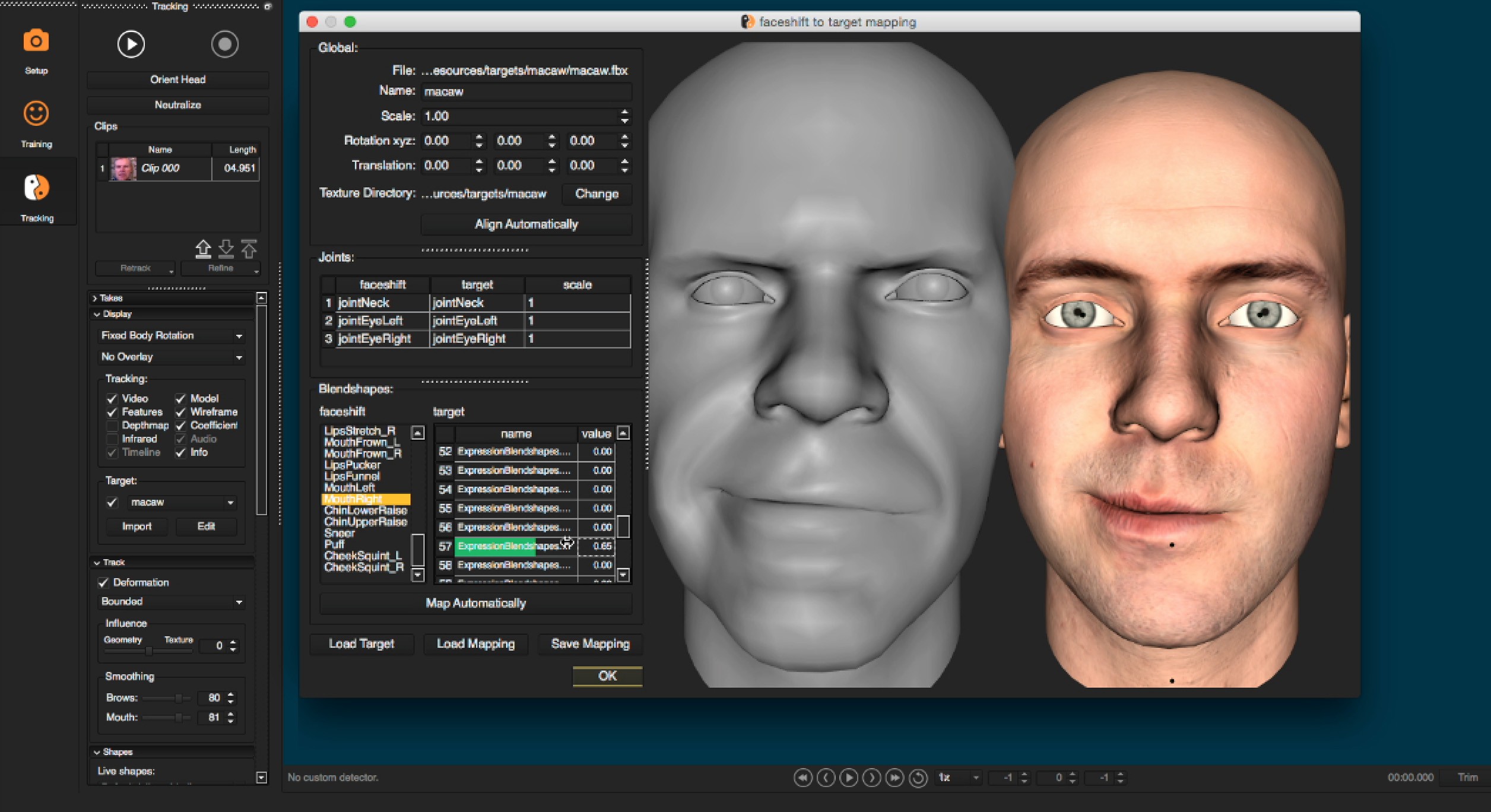

In this work, we propose a novel two-stage framework, called FaceShifter, for high-fidelity and occlusion-aware face swapping. Unlike many existing face swapping works that leverage only limited information from the target image when synthesizing the swapped face, our framework, in its first stage, generates the swapped face in high fidelity by exploiting and integrating the target attributes thoroughly and adaptively. We propose a novel attributes encoder for extracting multi-level target face attributes, and a new generator with carefully designed Adaptive Attentional Denormalization (AAD) layers to adaptively integrate the identity and the attributes for face synthesis. To address the challenging facial occlusions, we append a second stage consisting of a novel Heuristic Error Acknowledging Refinement Network (HEAR-Net). It is trained to recover anomaly regions in a self-supervised way without any manual annotations. Extensive experiments on wild faces demonstrate that our face swapping results are not only considerably more perceptually appealing, but also better at preserving identity than those of other state-of-the-art methods.

Face Shift is an experiment in algorithmic facial choreography. It is a play on the mirror symmetry of the human face, where each side of the face is.

The faceshift studio tracks and records the magnitude of different distinctive facial actions. The time-varying activation data are sampled at one sample per second, and the magnitude of activation is normalized to values between 0 and 1. Facial action units 6, 12, and 15 may be useful targets to probe autism-related traits.
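The sampling and normalization of the facial activation data mentioned above (one sample per second, magnitudes between 0 and 1) can be illustrated with a minimal sketch. The text does not say how the resampling or scaling is actually done, so the per-second averaging and the min-max scaling here are assumptions, and the function names `resample_per_second` and `normalize_01` are hypothetical. The sketch does not use any faceshift API.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def resample_per_second(samples: Sequence[Tuple[float, float]]) -> List[float]:
    """Average (time_in_seconds, magnitude) pairs into one value per whole second.

    Assumptions: timestamps are non-negative, values inside the same second
    are averaged, and seconds without any data are skipped.
    """
    buckets: Dict[int, List[float]] = defaultdict(list)
    for t, value in samples:
        buckets[int(t)].append(value)
    return [sum(buckets[s]) / len(buckets[s]) for s in sorted(buckets)]


def normalize_01(values: Sequence[float]) -> List[float]:
    """Min-max scale values into [0, 1]; a constant series maps to all zeros."""
    if not values:
        return []
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Made-up raw magnitudes for a single facial action, as (time, magnitude) pairs.
raw = [(0.0, 2.0), (0.5, 4.0), (1.2, 6.0), (2.1, 10.0), (2.9, 12.0)]
per_second = resample_per_second(raw)
activation = normalize_01(per_second)
print(per_second)   # [3.0, 6.0, 11.0]
print(activation)   # [0.0, 0.375, 1.0]
```

Min-max scaling is only one way to reach the 0 to 1 range. If the tracker already reports magnitudes on a fixed scale, dividing by that scale's maximum would be the more natural choice.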
